Following the post, we chose Open3D to better understand, visualize, and prepare datasets for future reconstructions. We were motivated to dig deeper partly by the difficulties we had with apps such as NerfCapture or SpectacularAI, whose output does not integrate readily with processing libraries. Understanding the raw data will let us keep incorporating new state-of-the-art algorithms, such as PromptDA. In this exploration, we use raw data recorded by the Stray Scanner app (which is built on ARKit) on an iPhone 15 Pro. As is often the case in engineering, the underlying mathematics is essential. Let's describe and visualize each data component.

Camera_matrix.csv

This file contains the camera's 3x3 intrinsic matrix K (focal lengths fx, fy and principal point cx, cy) under a pinhole camera model. These values are calibrated for the full-size RGB images, so if the images are resized, the intrinsics must be rescaled by the same factors: fx and cx by the width ratio, fy and cy by the height ratio.
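As a minimal sketch of that rescaling, the snippet below loads the matrix with NumPy and scales it for smaller images. It assumes camera_matrix.csv holds a comma-separated 3x3 matrix and that the RGB frames are 1920x1440 while the depth maps are 256x192; check both against your own capture. The helper names and the example matrix are hypothetical.

```python
import numpy as np


def load_camera_matrix(path):
    """Read a comma-separated 3x3 intrinsic matrix K (assumed layout of camera_matrix.csv)."""
    return np.loadtxt(path, delimiter=",")


def scale_intrinsics(K, full_size, target_size):
    """Rescale K, calibrated at full_size=(width, height), for images resized to target_size."""
    sx = target_size[0] / full_size[0]
    sy = target_size[1] / full_size[1]
    K_scaled = np.array(K, dtype=float)  # copy so the original matrix is untouched
    K_scaled[0, 0] *= sx  # fx
    K_scaled[0, 2] *= sx  # cx
    K_scaled[1, 1] *= sy  # fy
    K_scaled[1, 2] *= sy  # cy
    return K_scaled


# Illustrative (not measured) matrix: 1920x1440 RGB resized to a 256x192 depth grid
K_full = np.array([[1500.0, 0.0, 960.0],
                   [0.0, 1500.0, 720.0],
                   [0.0, 0.0, 1.0]])
K_depth = scale_intrinsics(K_full, (1920, 1440), (256, 192))
print(K_depth)
```

The scaled values can then be passed to o3d.camera.PinholeCameraIntrinsic(width, height, fx, fy, cx, cy).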

Odometry.csv

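Each row holds raw data in the form (timestamp, frame, x, y, z, qx, qy, qz, qw). We convert the quaternion (qx, qy, qz, qw) to a rotation matrix with scipy, R = Rotation.from_quat(quaternion).as_matrix(), and combine R with the position (x, y, z) into a 4x4 homogeneous transformation matrix, the camera-to-world pose T_{wc}, for use in Open3D.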
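A minimal sketch of that conversion follows. It assumes the column order listed above, a single header line in odometry.csv, and scipy's default scalar-last quaternion order (x, y, z, w), which matches the CSV. The function names and example values are hypothetical.

```python
import numpy as np
from scipy.spatial.transform import Rotation


def pose_from_row(row):
    """Turn one odometry row (timestamp, frame, x, y, z, qx, qy, qz, qw) into a 4x4 T_wc."""
    position = np.asarray(row[2:5], dtype=float)
    quaternion = np.asarray(row[5:9], dtype=float)  # scalar-last, as scipy expects by default
    T_wc = np.eye(4)
    T_wc[:3, :3] = Rotation.from_quat(quaternion).as_matrix()
    T_wc[:3, 3] = position
    return T_wc


def load_poses(path):
    """Read every row of odometry.csv (assumed to start with one header line)."""
    rows = np.loadtxt(path, delimiter=",", skiprows=1, ndmin=2)
    return [pose_from_row(row) for row in rows]


# Illustrative row: 90-degree rotation about z, camera 1.5 m above the origin
s = np.sin(np.pi / 4)
T = pose_from_row([0.0, 0, 0.0, 0.0, 1.5, 0.0, 0.0, s, s])
print(np.round(T, 3))
```

Because ndmin=2 is set, a capture with a single odometry row still yields a list with one pose instead of iterating over the values of that row.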

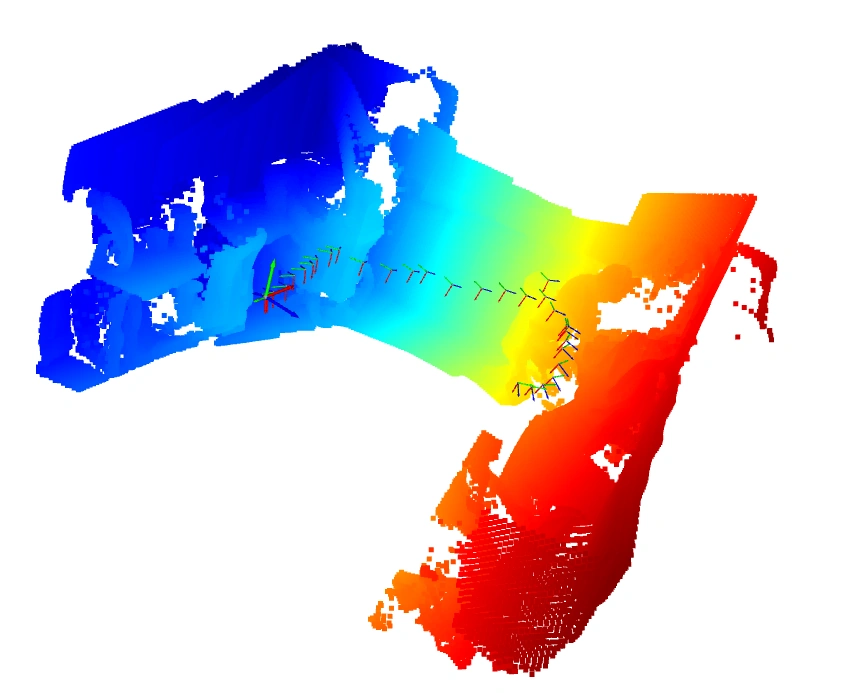

To display these poses in Open3D, we created a coordinate-frame mesh with o3d.geometry.TriangleMesh.create_coordinate_frame() for each pose, moved it into place with mesh.transform(), and added the meshes to an o3d.visualization.Visualizer().

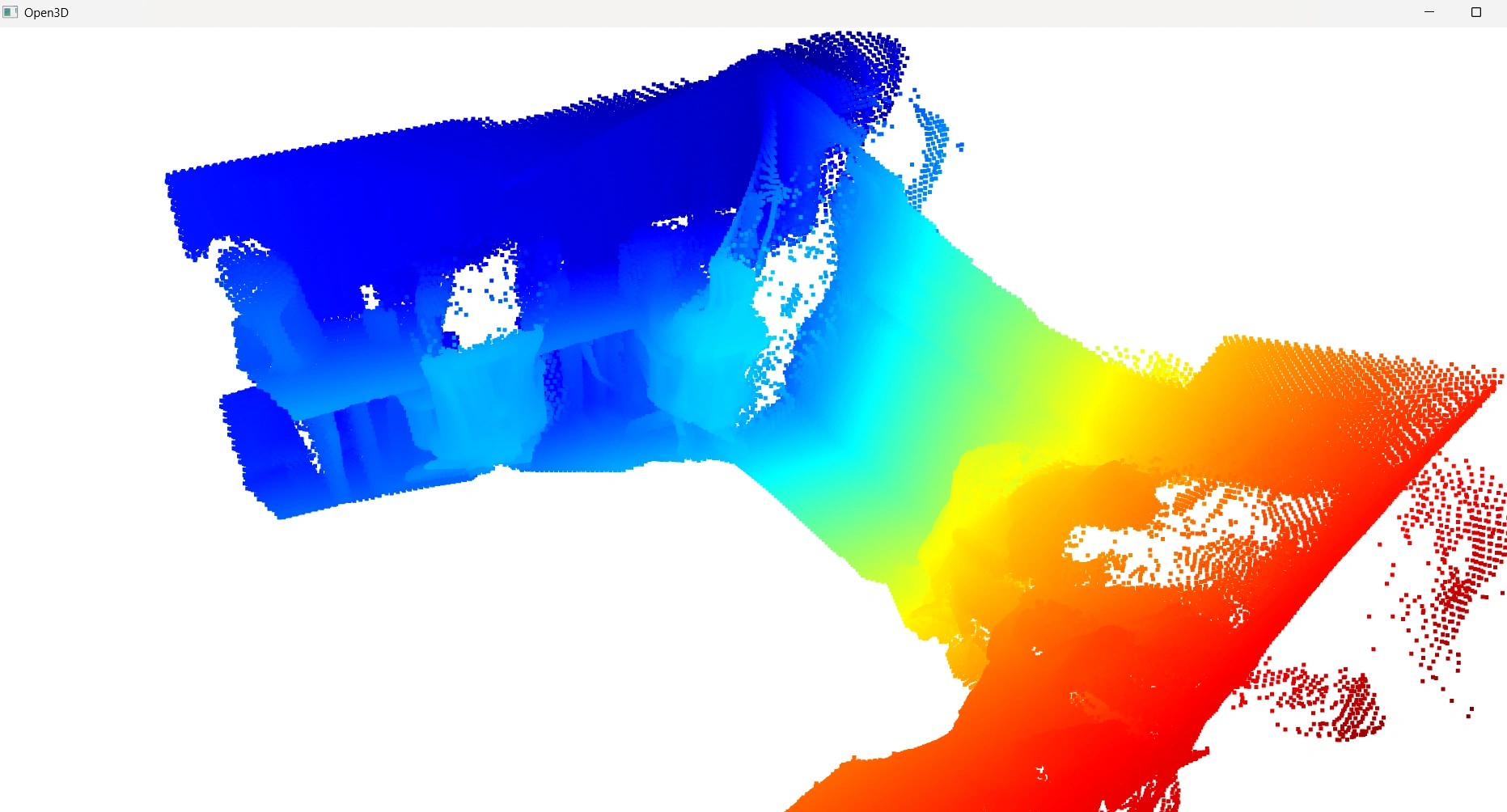

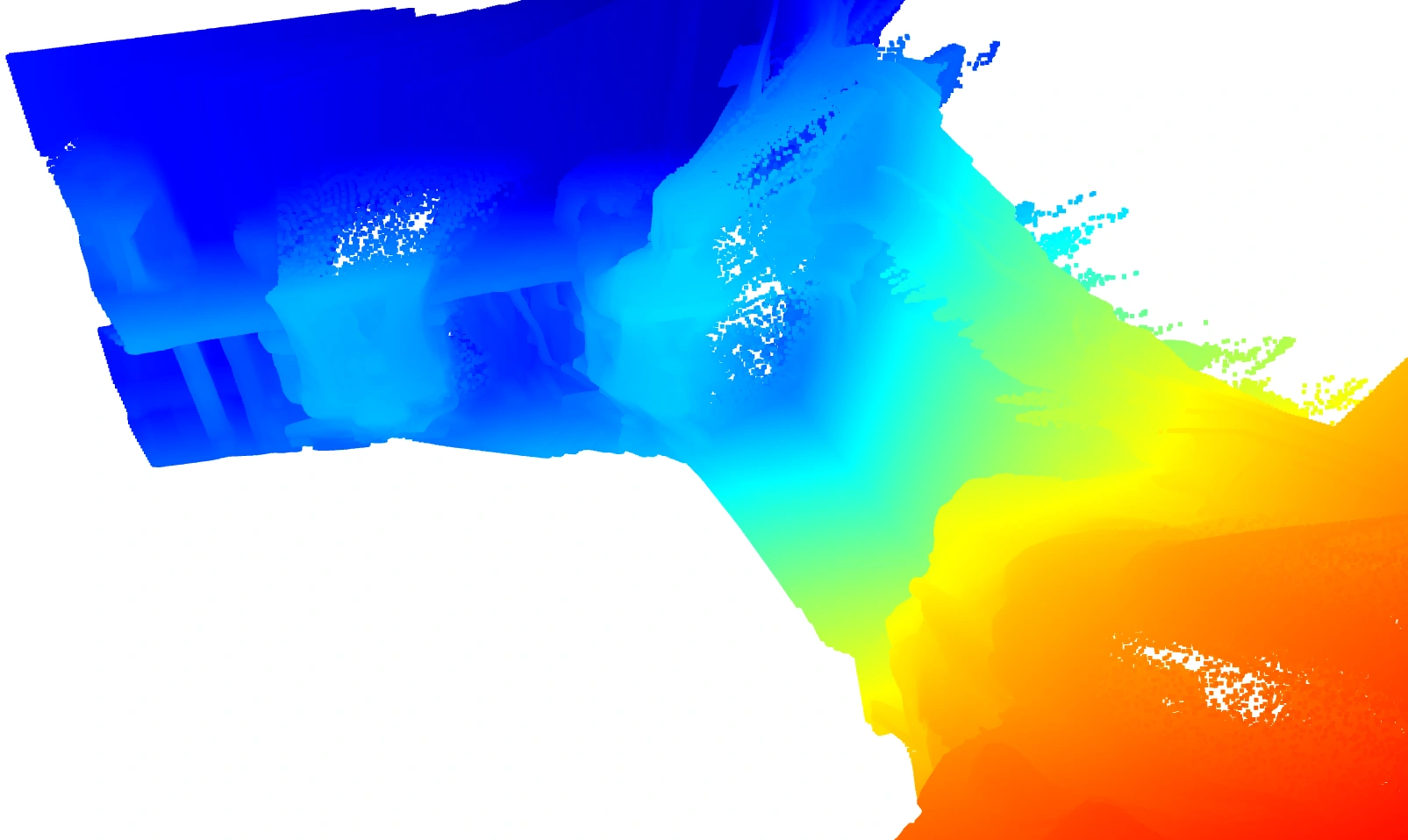

Depth images are stored as single-channel 16-bit PNGs rather than RGB images, and each app uses its own depth scale (1000 for Stray Scanner, i.e., depth values in millimeters). We therefore converted each depth image to a point cloud with o3d.geometry.PointCloud.create_from_depth_image, passing the camera model as the intrinsic parameters and the inverse of the odometry pose, T_{cw} = T_{wc}^{-1}, as the extrinsic parameters. We then processed the point cloud with uniform down-sampling, outlier removal, clustering, and plane segmentation. The Open3D PointCloud documentation covers each of these operations in detail.

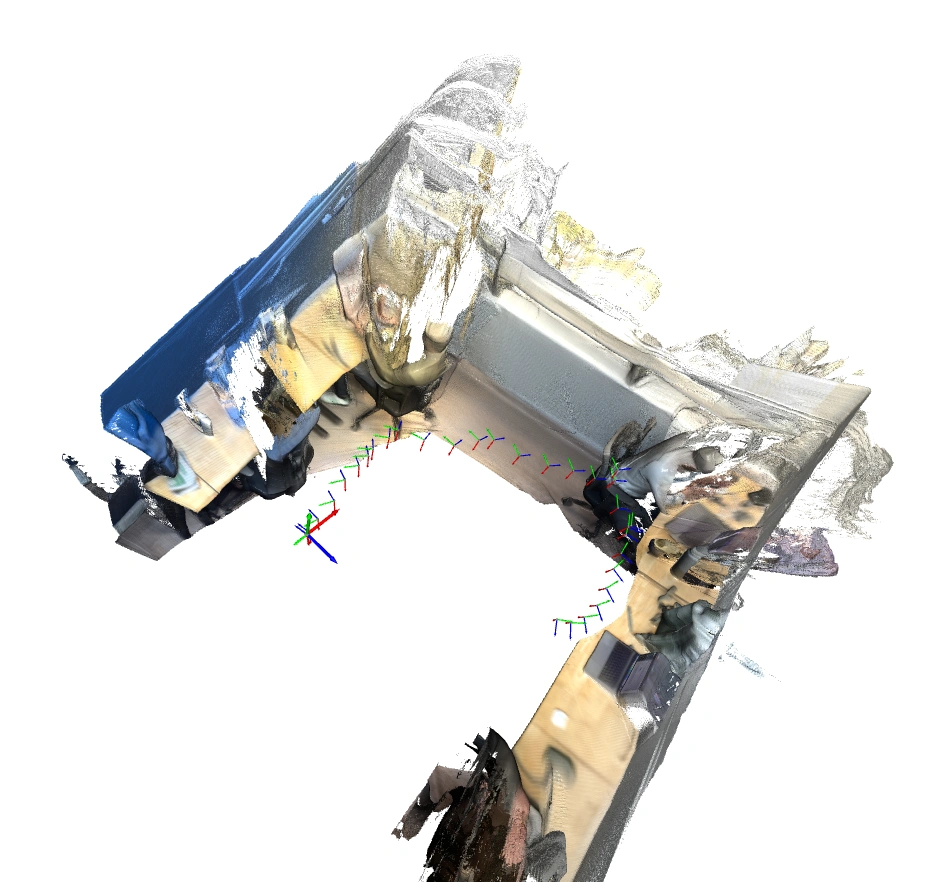

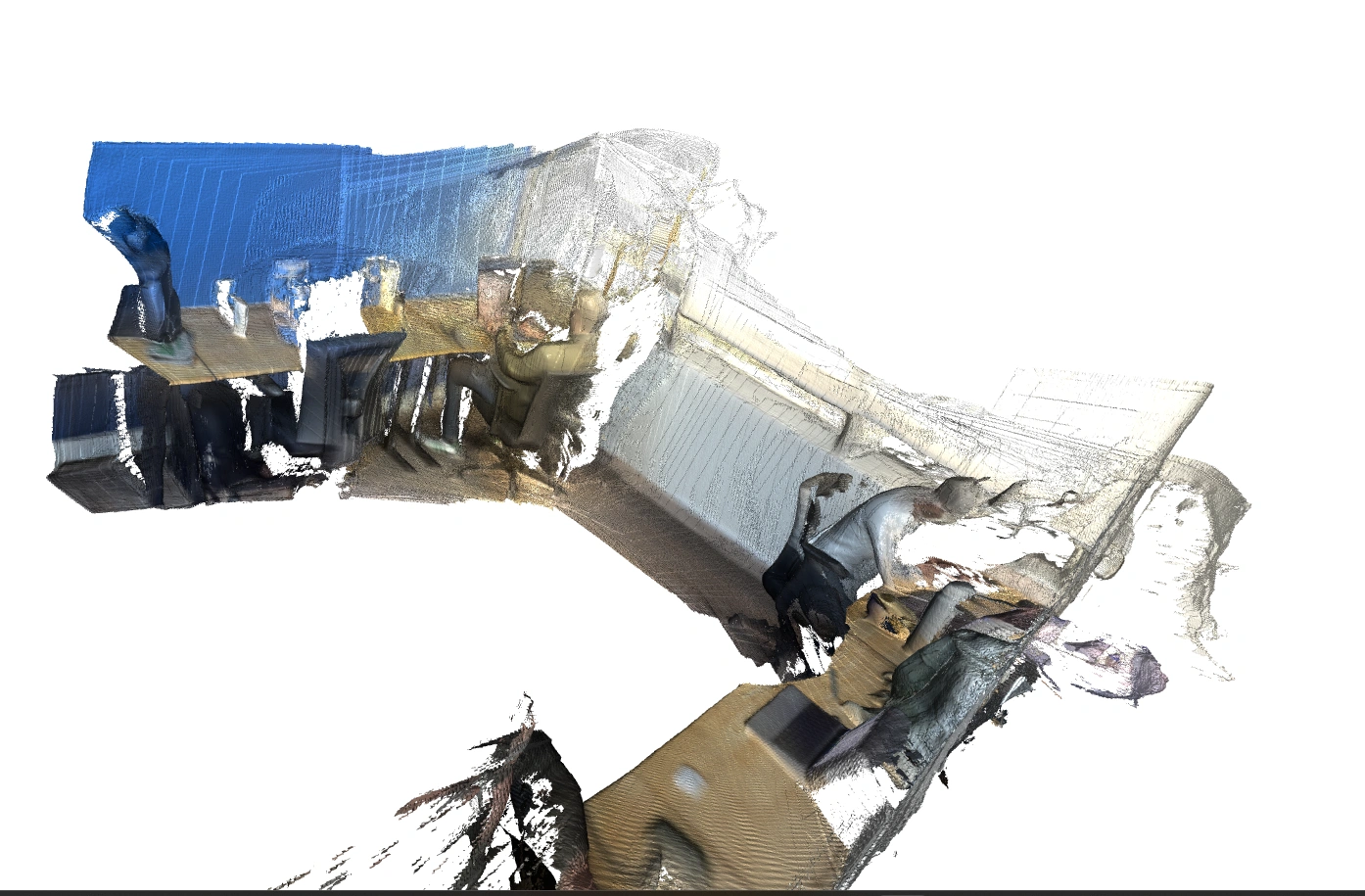

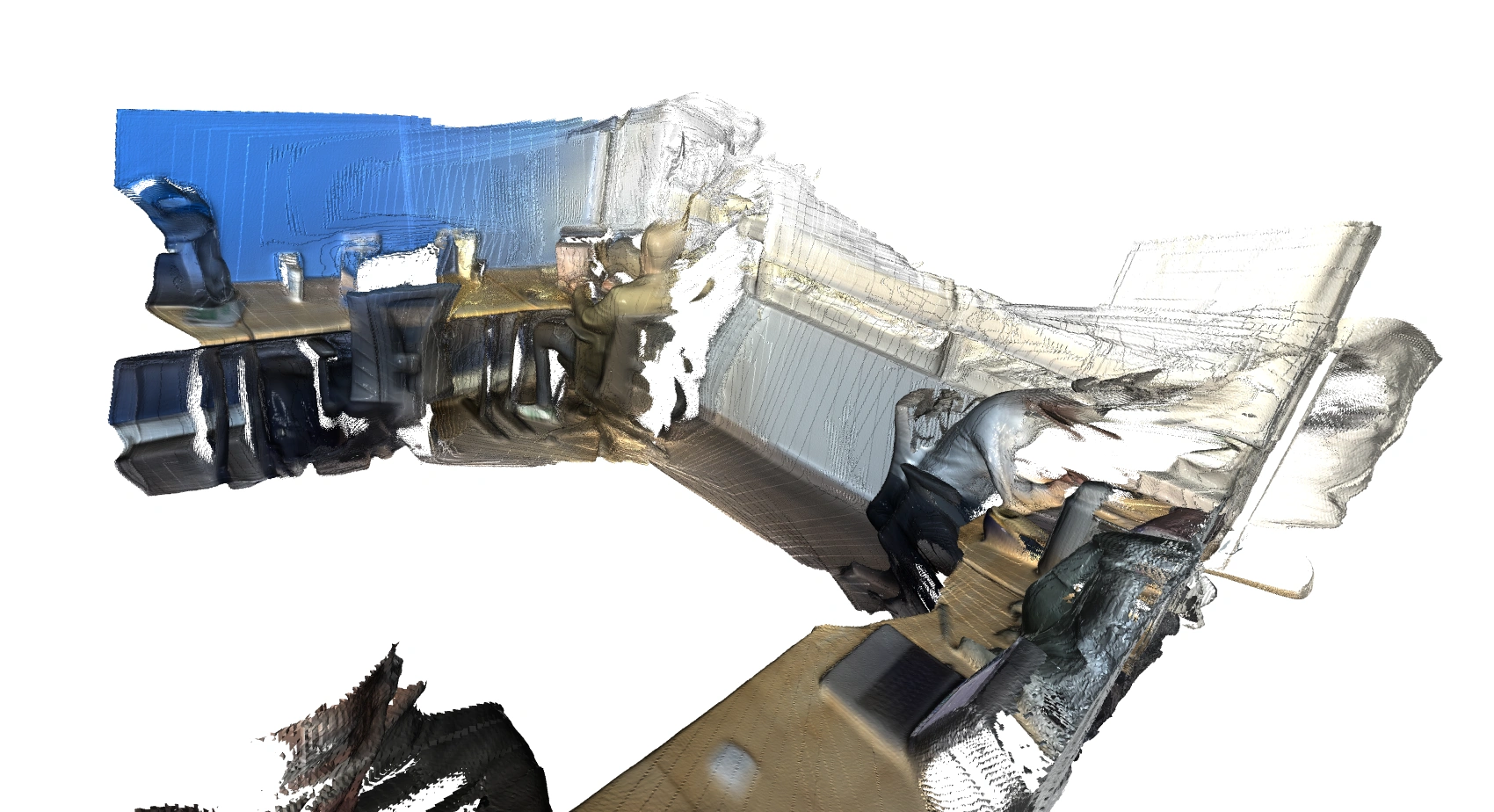

Stray Scanner stores the color images in an MP4 video. We used FFmpeg to extract the individual frames and scaled them to the resolution of the depth images. We then built an RGB-D image from each color/depth pair with RGBDImage.create_from_color_and_depth and fused these images into a ScalableTSDFVolume using the intrinsic and extrinsic matrices.

Our next step was to increase the depth-map resolution with PromptDA (Prompt Depth Anything), which uses low-resolution LiDAR depth, here from iPhone captures, as a prompt for the Depth Anything model to predict higher-resolution metric depth. Although the visual improvements may not be immediately apparent, further point cloud processing and better odometry estimates could take advantage of this significantly denser point cloud data (up to 50x increase).

| Original | With PromptDA at 1008x756 depth image |
|---|---|
|  |  |
|  |  |

Conclusion

This exploration of the raw RGB-D data from an iPhone, captured using the Stray Scanner app, has provided a clearer understanding of the data structure and its components, such as camera intrinsics and odometry. By using Open3D for visualization and processing, we successfully manipulated point clouds, integrated RGB images, and experimented with increasing depth image resolution using PromptDA.

While the improvements from PromptDA were subtle in this initial stage, further refinement of odometry and point cloud processing may unlock the potential of the enhanced depth data. This hands-on experience paves the way for integrating more advanced algorithms and techniques for 3D reconstruction.