A few weeks ago, FOSDEM 2025 took place, and this edition introduced something exciting for the robotics community: the launch of a brand-new Robotics and Simulation Devroom.

For those unfamiliar with FOSDEM, it’s a two-day event organized by volunteers to promote the use of free and open-source software. This year’s edition included 1,118 speakers across 78 tracks. A notable change was the evolution of the Robotics BoF (Birds of a Feather session) into a half-day devroom, featuring a mix of 20-minute talks and 5-minute lightning talks.

Many presentations are already available online and can be watched directly from their respective pages. Ekumen played an active role in this devroom, both on the organizing committee and as speakers, delivering one long talk and one lightning talk.

Now, let’s dive into our main presentation: Accelerating robotics development through simulation.

Robotics and simulations

What is a robotic system?

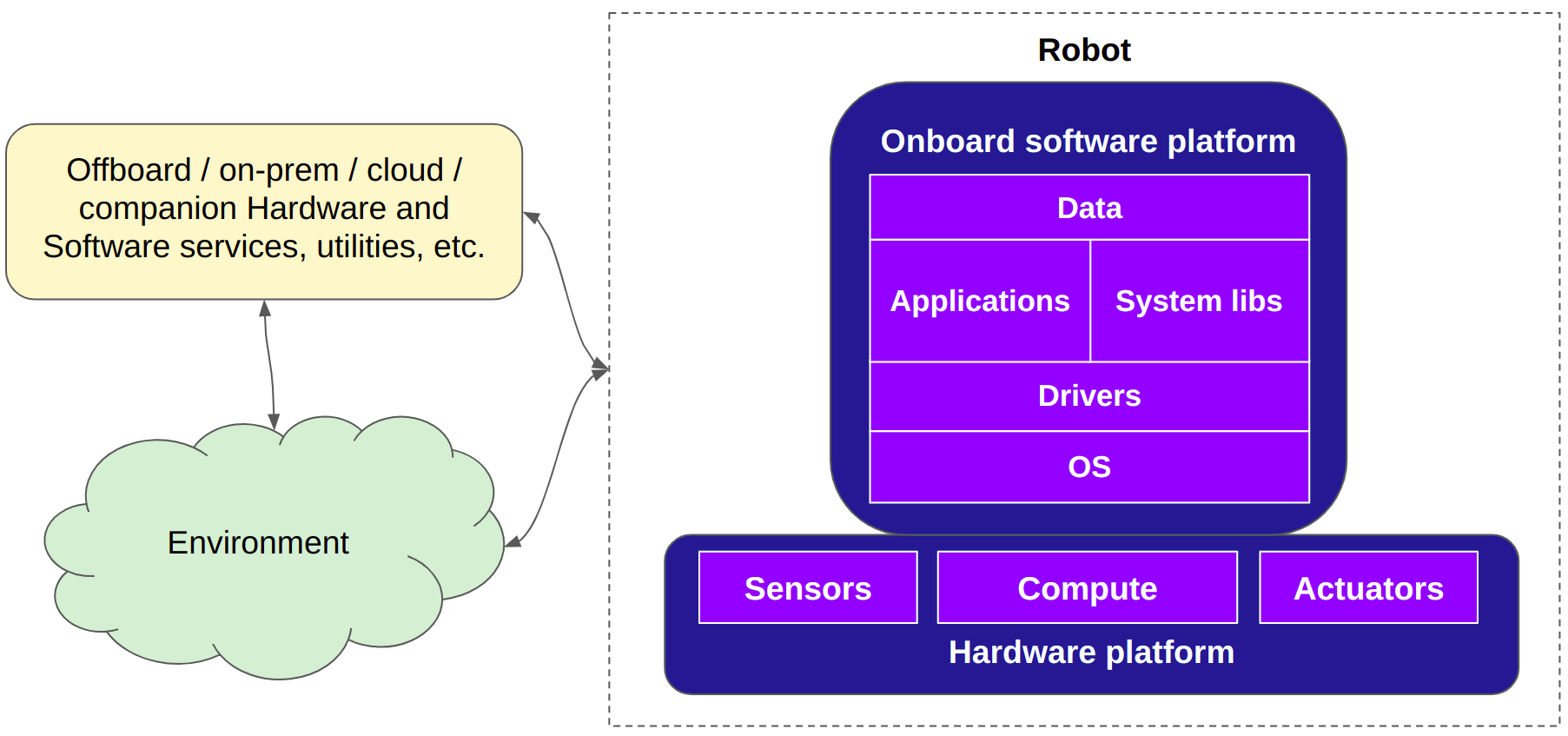

For the purpose of this analysis, it is convenient to start with some definitions that will help us later on. Using the diagram above as support, a robot is a system composed of a hardware platform and an onboard software platform.

Pretty much every robot out there has one or more compute units, possibly of different architectures, connected to sensors and actuators. This hardware model is enough to support the well-known sense-think-act model for designing robotic systems.
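To make the sense-think-act cycle concrete, here is a minimal sketch built on a toy, hypothetical robot (`CorridorRobot`) that drives along a corridor towards a wall. Each method maps to one stage of the cycle: a range reading (sense), a stop-or-go decision (think), and a velocity command (act). The names, the stop margin and the speeds are illustrative assumptions, not part of any real robot stack.

```python
from dataclasses import dataclass


@dataclass
class CorridorRobot:
    """Hypothetical robot moving along a corridor towards a wall."""
    position: float = 0.0  # metres from the start of the corridor

    def sense(self, wall_at: float) -> float:
        # Sense: a range sensor reports the distance to the wall ahead.
        return wall_at - self.position

    def think(self, distance: float, stop_margin: float = 0.5) -> float:
        # Think: keep driving until within the stop margin, then stop.
        return 0.2 if distance > stop_margin else 0.0

    def act(self, velocity: float, dt: float) -> None:
        # Act: a velocity actuator moves the robot for one control period.
        self.position += velocity * dt


def control_loop(robot: CorridorRobot, wall_at: float = 2.0,
                 dt: float = 0.1, steps: int = 100) -> float:
    """Run the sense-think-act cycle and return the final position."""
    for _ in range(steps):
        distance = robot.sense(wall_at)
        command = robot.think(distance)
        robot.act(command, dt)
    return robot.position


final_position = control_loop(CorridorRobot())
```

The robot ends up roughly 0.5 m from the wall. On real hardware, `sense` and `act` would talk to drivers; in simulation, the simulator provides them.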

On the software side, the platform generally needs to grow vertically from the OS all the way up to the application and data layers. In between, we find middleware and specific functional components that give the robot the skills it needs for the task at hand.

Robots interact with other robots or systems either through the surrounding environment or through a virtual environment built on communication protocols (both physical and logical). These interfaces are not necessarily the same from robot to robot or from application to application.

What is a simulator?

A simulator is an application that runs a scenario with a given model.

A scenario is a representation of a collection of systems and their environment which evolve throughout time.

A model is a representation of the rules that govern a process.
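The three definitions above can be sketched in a few lines. In this hypothetical example, a `Scenario` holds the state of the systems and their environment plus the current time, a model is any function that applies the governing rules over one time step, and `simulate` is the application that runs the scenario with that model. The falling-ball model and all names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, float]


@dataclass
class Scenario:
    """Systems and their environment, captured as a named state evolving in time."""
    state: State
    time: float = 0.0


# A model: the rules that govern how the state changes over one time step.
Model = Callable[[State, float], State]


def falling_ball(state: State, dt: float) -> State:
    # Hypothetical model: a ball under gravity, integrated with semi-implicit Euler.
    g = -9.81
    vz = state["vz"] + g * dt
    z = state["z"] + vz * dt
    return {"z": z, "vz": vz}


def simulate(scenario: Scenario, model: Model, dt: float, duration: float) -> List[State]:
    """A simulator: runs the scenario forward in time with the given model."""
    history = [dict(scenario.state)]
    for _ in range(round(duration / dt)):
        scenario.state = model(scenario.state, dt)
        scenario.time += dt
        history.append(dict(scenario.state))
    return history


history = simulate(Scenario(state={"z": 10.0, "vz": 0.0}), falling_ball, dt=0.1, duration=1.0)
```

Keeping the model separate from the scenario means the same scenario can be replayed with a different model (or the same model with a different scenario) without touching the simulator loop.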

What is a robotic simulator?

A robotic simulator is an application that allows users to model scenarios involving robotic systems.

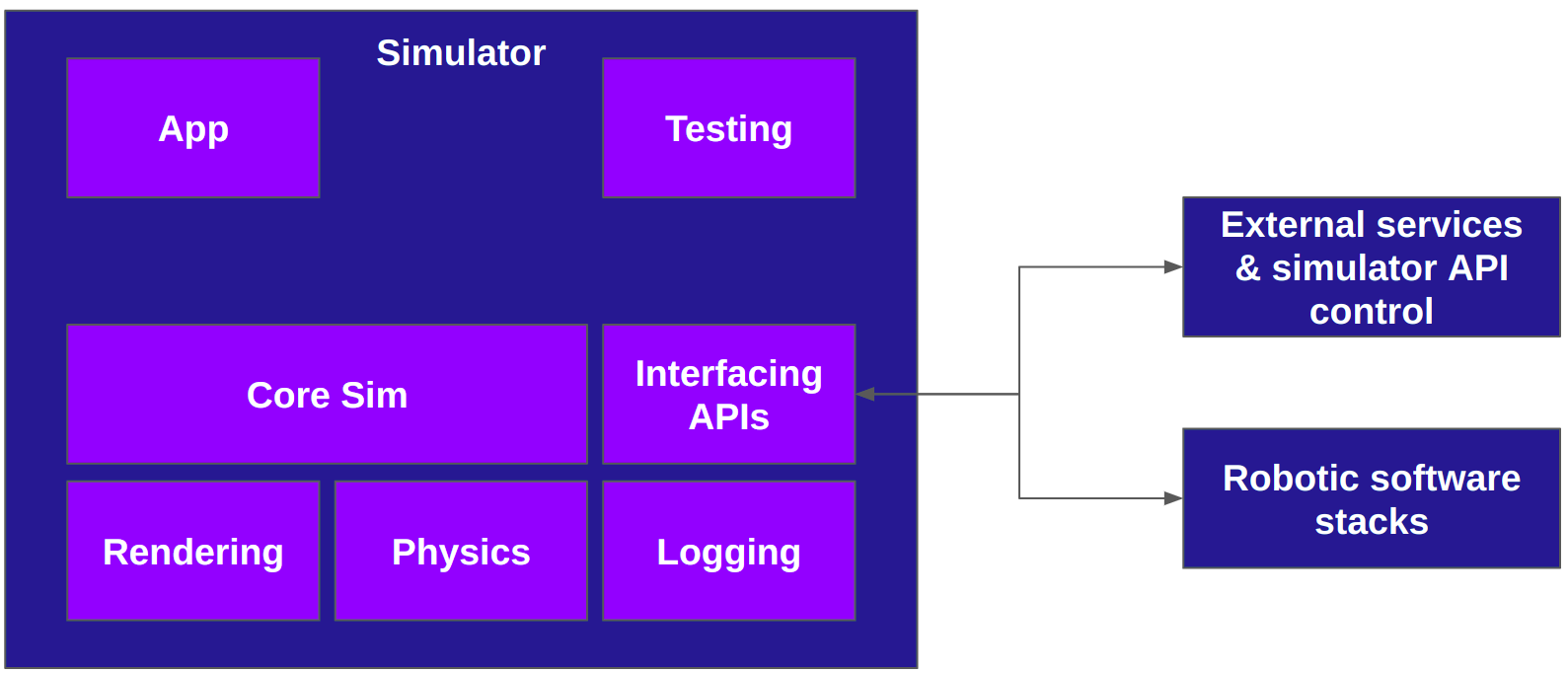

The diagram above shows the basic architecture of a robotics simulator. Let’s analyze its key components:

- Physics: the engine responsible for solving all the interactions between the different “moving parts”. In other words, it keeps the state of all the models in the scene and evolves it over time. This component typically involves solving kinematic and dynamic chains and rigid body trees, and/or dealing with soft bodies. It is usually CPU-intensive, but recent years have brought interesting advances in physics engines that offload computation to GPUs to take advantage of their parallelism.

- Rendering: it generates visual representations of the simulated scene. These do not necessarily have to be output to a physical screen; in many cases (e.g. in CI environments) it is preferable to render into virtual buffers instead. On top of that, the features offered by the rendering engine determine how fast and how detailed the visualizations can be.

- Logging: in practice, this means logging and tracing. Every component needs to log and trace its execution so that users can understand what is going on. We don’t stop there, though: the scenario itself may be logged and played back many times, later on, for tests or introspection activities.
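The record-and-playback idea from the logging component can be illustrated with a small sketch. The `ScenarioLog` class and `playback` function below are hypothetical names, and JSON lines is just one convenient format we assume here; real simulators ship their own log formats and tools.

```python
import json
from typing import Dict, Iterator, List, Tuple

# (simulation time, state snapshot)
Frame = Tuple[float, Dict[str, float]]


class ScenarioLog:
    """Hypothetical recorder that stores timestamped state snapshots as JSON lines."""

    def __init__(self) -> None:
        self._lines: List[str] = []

    def record(self, t: float, state: Dict[str, float]) -> None:
        # One JSON object per line keeps the log appendable and easy to stream.
        self._lines.append(json.dumps({"t": t, "state": state}, sort_keys=True))

    def dump(self) -> str:
        return "\n".join(self._lines)


def playback(serialized: str) -> Iterator[Frame]:
    """Replay a recorded scenario frame by frame, e.g. to drive a test or a viewer."""
    for line in serialized.splitlines():
        entry = json.loads(line)
        yield entry["t"], entry["state"]


# Example: record three frames once, then replay them as many times as needed.
log = ScenarioLog()
for i in range(3):
    log.record(i * 0.5, {"x": float(i)})
recording = log.dump()
first_pass = list(playback(recording))
second_pass = list(playback(recording))
```

Because playback reads from the recording rather than re-running physics, every replay yields the same frames, which is what makes logged scenarios useful as regression inputs.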

On top of these three fundamental components, there exist:

- Core Sim: the core simulator component, which glues the rendering, physics engine and logging libraries together, creates the simulation state, and keeps track of the simulation timeline. This is also where simulator control logic can be introduced (playback from data / scenario evolution / asset control / event manager / etc.).

- Interfacing APIs: once the simulator is glued together, we can either build ad-hoc applications that inject the system under test into the simulator, or use plugins / APIs / files to inject external functionality. For example, a scene can be defined in a file (I want my robot here and a box there). This is typically where we plug the robot software stack into the simulator so it can interact with the simulated world, or connect external services to inject / extract information.
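A toy version of the Core Sim and its interfacing APIs might look like the sketch below. Everything here is a hypothetical stand-in: `CoreSim`, the JSON scene format, the constant-velocity "physics", the frame-counting "renderer" and the plugin signature are assumptions chosen to show how the pieces connect, not the API of any real simulator.

```python
import json
from typing import Callable, Dict, List

Body = Dict[str, float]
# A plugin receives the current time and the world state and may modify it.
Plugin = Callable[[float, Dict[str, Body]], None]


class CoreSim:
    """Hypothetical core simulator: glues stand-in physics, rendering and
    logging together, owns the world state and the simulation timeline."""

    def __init__(self, scene_json: str, dt: float = 0.01) -> None:
        # Scene loaded from a file-style description ("my robot here, a box there").
        scene = json.loads(scene_json)
        self.bodies: Dict[str, Body] = {
            name: {"x": float(pose["x"]), "vx": float(pose.get("vx", 0.0))}
            for name, pose in scene["bodies"].items()
        }
        self.dt = dt
        self.time = 0.0
        self.frames_rendered = 0
        self.plugins: List[Plugin] = []
        self.log: List[str] = []

    def register_plugin(self, plugin: Plugin) -> None:
        # Interfacing API: external code, e.g. a robot software stack, hooks in here.
        self.plugins.append(plugin)

    def _physics_step(self) -> None:
        # Stand-in physics engine: constant-velocity motion along x.
        for body in self.bodies.values():
            body["x"] += body["vx"] * self.dt

    def _render(self) -> None:
        # Stand-in headless renderer: counts frames instead of drawing to a screen.
        self.frames_rendered += 1

    def step(self) -> None:
        # One tick of the timeline: plugins, physics, rendering, logging.
        for plugin in self.plugins:
            plugin(self.time, self.bodies)
        self._physics_step()
        self._render()
        self.time += self.dt
        self.log.append(json.dumps({"t": self.time, "bodies": self.bodies}, sort_keys=True))


# Example: a hypothetical controller plugin drives the robot towards the box.
def drive_forward(t: float, bodies: Dict[str, Body]) -> None:
    bodies["robot"]["vx"] = 0.5


sim = CoreSim('{"bodies": {"robot": {"x": 0.0}, "box": {"x": 1.0}}}', dt=0.1)
sim.register_plugin(drive_forward)
for _ in range(10):
    sim.step()
```

The design choice worth noting is that the plugin only sees the world state, not the engines, so the same robot-side code could, in principle, be attached to a different physics or rendering backend.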

Finally:

- Testing: Facilitates automated testing of the robotic system within the simulation.

- Simulator App: Manages and controls the simulation, potentially providing a user interface.

What can we do with a robotic simulator?

In short, a robotic simulator lets you:

- Model your robotic system and the software-hardware interactions.

- Test your system against physical stimuli and evaluate the software’s responses.

- Validate systems before releasing.

- Learn and tune controllers and other parameters in simulation before moving to the physical setup, saving significant resources.

- Generate data to gain trust in your system.

- Train controllers in simulation.
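As an illustration of tuning controllers in simulation before touching hardware, the sketch below grid-searches PD gains for a hypothetical one-degree-of-freedom joint (unit inertia, torque-limited) and keeps the pair with the lowest integrated squared error. The plant, the cost function and the gain grids are all assumptions for the example; real tuning would use a validated model of the actual arm.

```python
import itertools
from typing import Iterable, Tuple


def simulate_joint(kp: float, kd: float, target: float = 1.0, dt: float = 0.01,
                   duration: float = 5.0, u_max: float = 2.0) -> float:
    """Simulate a hypothetical 1-DoF joint (unit inertia, torque-limited) under PD
    control and return the integrated squared position error."""
    x, v, cost = 0.0, 0.0, 0.0
    for _ in range(round(duration / dt)):
        error = target - x
        u = max(-u_max, min(u_max, kp * error - kd * v))  # saturate the actuator
        v += u * dt  # semi-implicit Euler: velocity first, then position
        x += v * dt
        cost += error * error * dt
    return cost


def tune(kp_values: Iterable[float], kd_values: Iterable[float]) -> Tuple[float, float]:
    """Grid search: return the (kp, kd) pair with the lowest simulated cost."""
    return min(itertools.product(kp_values, kd_values),
               key=lambda gains: simulate_joint(*gains))


KP_GRID = [1.0, 4.0, 16.0]
KD_GRID = [0.5, 2.0, 8.0]
best_kp, best_kd = tune(KP_GRID, KD_GRID)
best_cost = simulate_joint(best_kp, best_kd)
```

Gains found this way are a starting point rather than a final answer: they still need to be validated on the physical robot, but far fewer hardware iterations should be required.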

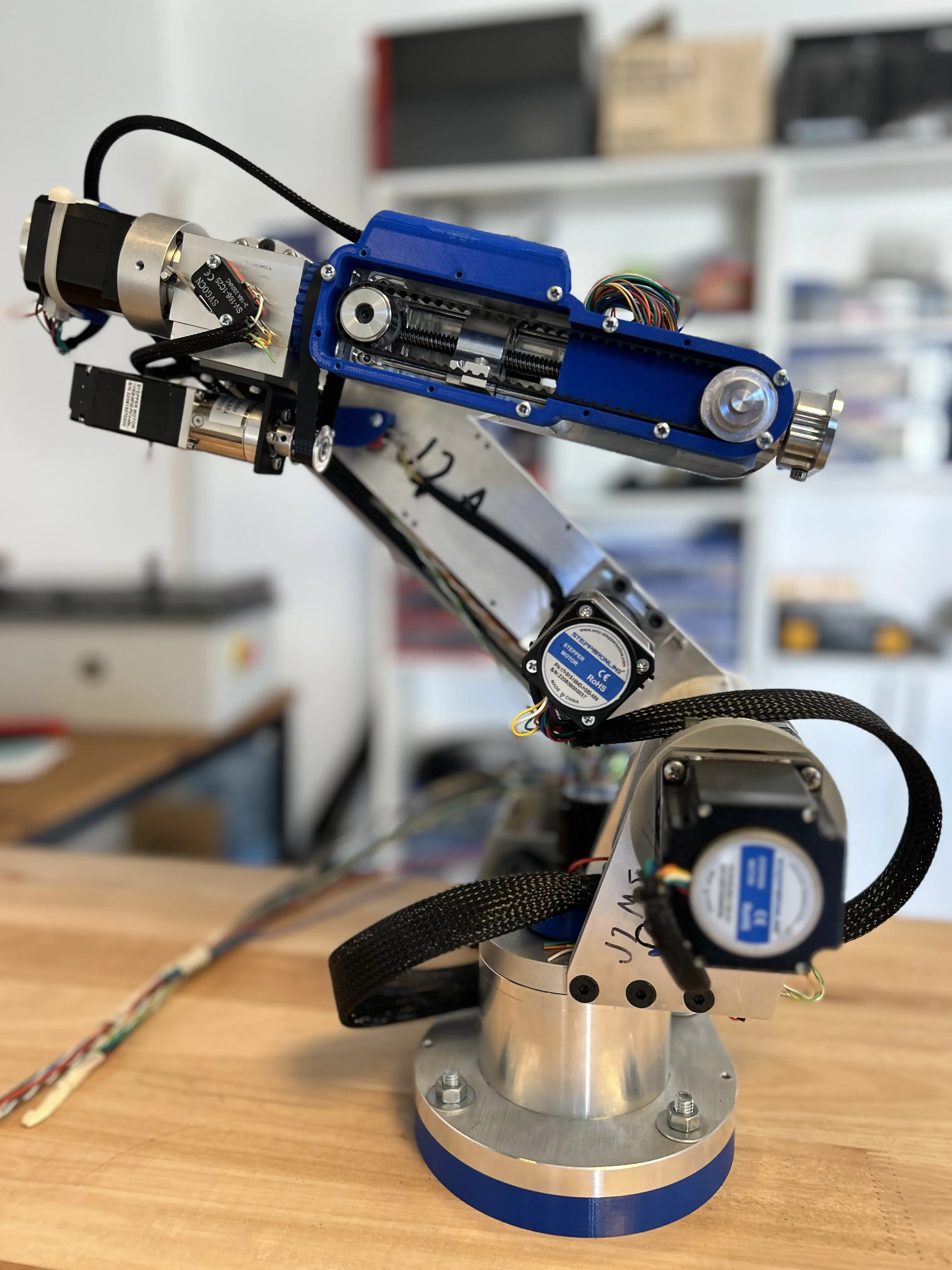

Imagine a distributed team developing on the same AR4 robotic arm.

With a simulator environment, you can develop most of your automation solution and debug many logic and system issues before trying it on the real hardware. This reduces contention for the hardware and increases the effective use of time on it.

Picking the right tool for the job

How to pick a robotic simulator?

We’ve found many different use cases and requirements for robotics simulators. Since no single technology covers all of them at the same time, we are forced to ask:

- Identify use cases:
  - Is it a testing tool?
  - Is it a validation tool?
  - Is it a development tool?
  - Is it a data-gathering tool?
  - Do you need a hardware-in-the-loop system?
- Identify the type of robotic system:
  - Ground, aerial, or maritime vehicles?
  - Robotic arm, mobile robot, or custom mechanism?
- Identify scenarios:
  - Indoor vs outdoor.
  - One vs multiple robots.
  - Do you need automated behaviors in the scene?
  - Are scenarios long-running?
  - Do you need determinism?
  - Do you need scene-creation tools?
  - What are your digital asset library requirements?
- Identify physics requirements:
  - Kinematics only, dynamics, or complex dynamics?
  - Which sensors and actuators are required?
  - Do you need soft bodies?
- Identify rendering requirements:
  - Do you need rendering at all?
  - Do you need photorealism?
  - Will it run on CPU only, or do you have a GPU?
- Identify the maintenance model:
  - Are you relying on the community?
  - Can your entire stack move forward with your technology decision?

Once you have answered these questions, you need to evaluate the possibilities out there satisfying your requirements.

This is not a simple task. In principle, you would need to learn each candidate simulator, model the same system in all of them, and benchmark the results. Sometimes, doing all of that for just one simulator is all you need.
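A first, mechanical pass over the questionnaire can at least narrow the field before any benchmarking starts. The sketch below encodes your answers as required and forbidden capability tags and keeps the candidates that satisfy them. The candidate names and their capability sets are deliberately hypothetical placeholders, not an assessment of any real simulator.

```python
from typing import Dict, List, Set

# Hypothetical capability tags; a real evaluation would derive these from
# documentation and hands-on benchmarks, not from a hard-coded table.
CANDIDATES: Dict[str, Set[str]] = {
    "simulator_a": {"ros2", "3d", "dynamics", "headless"},
    "simulator_b": {"2d", "multi_robot", "fast", "headless"},
    "simulator_c": {"ros2", "3d", "dynamics", "photorealism", "gpu_required"},
}


def shortlist(required: Set[str], forbidden: Set[str],
              candidates: Dict[str, Set[str]]) -> List[str]:
    """Keep candidates that cover every required capability and none of the forbidden ones."""
    return sorted(
        name for name, caps in candidates.items()
        if required <= caps and not (forbidden & caps)
    )


# Example answers: a CI testing tool for a 3D arm, with no GPU available.
picks = shortlist({"ros2", "3d", "dynamics", "headless"}, {"gpu_required"}, CANDIDATES)
```

A filter like this only removes clear mismatches; the candidates that survive still need to be modeled and benchmarked as described above.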

At Ekumen, we faced this kind of scenario repeatedly. This is why we decided to maintain Andino simulations for multiple simulators. Best of all, they are open source for everyone out there who wants to use them:

- Gazebo Classic: it recently reached end of life (EoL), but for many years it served robotic projects of the most diverse types.

- Gazebo: the successor to Gazebo Classic. Re-architected as a general-purpose simulator, it is the default option for ROS 2 integration.

- Webots: another great and simple general-purpose robotic simulator with tons of language bindings, plugins and examples. Lightweight, monolithic and easy to learn, it is a great choice.

- O3DE: thanks to the O3DE game engine, this simulator brings modern rendering features to robotic simulation.

- NVIDIA Omniverse Isaac: built on the powerful NVIDIA Omniverse platform, it is a great choice when you have access to NVIDIA hardware, both for classic robotics simulation and for modern pipelines such as AI Gym environments or even synthetic data pipelines.

- Flatland: it is a great choice for blazingly fast 2D simulations, e.g. multiple AMRs operating in a warehouse.

- MuJoCo: our R&D team uses it to learn physical properties and train controllers. It is generally used to extract accurate data and train systems on that data.

Contact us

We invite everyone to watch the full FOSDEM presentation here. We really hope you found something new in this article, or that it sparked some interesting thoughts about robotic simulators.

Please reach out to us; we will be happy to discuss your next simulation project with your team.